If you found value in this post, consider following me on X @davidpuplava for more valuable information about Game Dev, OrchardCore, C#/.NET and other topics.

AI is moving at lightning speed.

Things change in a matter of days or weeks, not months.

As a pet project, I created my own chat assistant application using Microsoft technologies and Ollama for local LLM chats.

I created it a few months ago when a number of the NuGet packages were in preview. Today I went to update the packages and most were several minor versions ahead.

I blindly updated to the latest versions of the packages and now I have build errors.

The first issue was with a project that references Microsoft.Extensions.AI.Ollama, specifically version 9.7.0-preview.1.25356.2, which is deprecated. Microsoft recommends using OllamaSharp as an alternative.

I went ahead and uninstalled the Microsoft.Extensions.AI.Ollama package and installed OllamaSharp, which produced a new set of build errors.

I checked the OllamaSharp documentation on GitHub and saw that I need to change a few things.

The client instantiation type changes from OllamaChatClient to OllamaApiClient.

//IChatClient summarizeClient = new OllamaChatClient(new Uri("http://localhost:11435/"));

IChatClient summarizeClient = new OllamaApiClient(new Uri("http://localhost:11435/"));

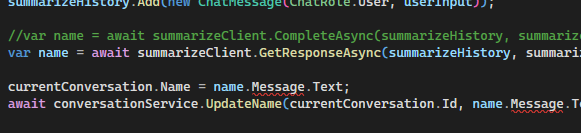
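For reference, here is a minimal sketch of the new client setup as a standalone program. It assumes the OllamaSharp and Microsoft.Extensions.AI.Abstractions packages are installed; the model name "llama3.1" is a placeholder of mine and not something from my project, so swap in whichever model you have pulled.

```csharp
using System;
using Microsoft.Extensions.AI;
using OllamaSharp;

// OllamaApiClient implements IChatClient, so it can be assigned directly
// to the abstraction the rest of the app already depends on.
// Assumption: "llama3.1" is a placeholder default model name.
IChatClient summarizeClient = new OllamaApiClient(
    new Uri("http://localhost:11435/"),
    "llama3.1");
```

Because the rest of the code only talks to IChatClient, this constructor call is the only place that needs to know about OllamaSharp.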

Then, the method call to get the chat completion changed from CompleteAsync to GetResponseAsync.

//var name = await summarizeClient.CompleteAsync(summarizeHistory, summarizeChatOptions, cancellationToken: responseCancellationToken);

var name = await summarizeClient.GetResponseAsync(summarizeHistory, summarizeChatOptions, cancellationToken: responseCancellationToken);

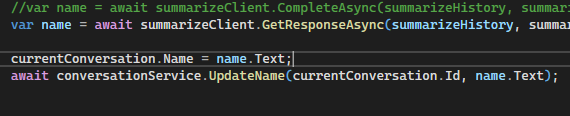

The next compiler error is related to the chat completion result itself.

Fix it by accessing the .Text property on the response itself rather than going through the .Message property.

//currentConversation.Name = name.Message.Text;

currentConversation.Name = name.Text;

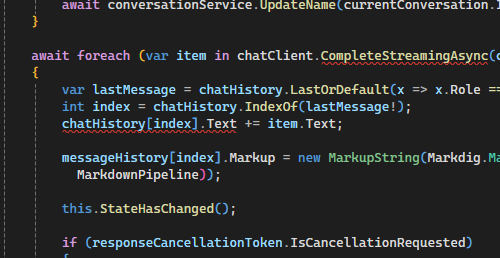
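Putting the last two changes together, a non-streaming call might look roughly like the sketch below. The prompt text and the "llama3.1" model name are made up for illustration, and the collection expressions assume C# 12 or later.

```csharp
using System;
using System.Collections.Generic;
using Microsoft.Extensions.AI;
using OllamaSharp;

// Assumption: placeholder model name; use one you have pulled locally.
IChatClient summarizeClient = new OllamaApiClient(
    new Uri("http://localhost:11435/"),
    "llama3.1");

// Hypothetical prompt, only here to make the sketch self-contained.
List<ChatMessage> summarizeHistory =
[
    new(ChatRole.System, "Summarize the conversation in five words or fewer."),
    new(ChatRole.User, "How do I migrate from Microsoft.Extensions.AI.Ollama to OllamaSharp?"),
];

// GetResponseAsync replaces the old CompleteAsync call.
ChatResponse response = await summarizeClient.GetResponseAsync(summarizeHistory);

// The text now lives directly on the response instead of on response.Message.
Console.WriteLine(response.Text);
```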

Of course, the most important change is to the streaming response itself, where .CompleteStreamingAsync(...) is replaced by .GetStreamingResponseAsync(...).

//await foreach (var item in chatClient.CompleteStreamingAsync(chatHistory, chatOptions, cancellationToken: responseCancellationToken))

await foreach (var item in chatClient.GetStreamingResponseAsync(chatHistory, chatOptions, cancellationToken: responseCancellationToken))

So far, so good, but the problem is with assigning to the .Text property of the item in that list. For this particular implementation, it happens to be a ChatMessage type from the Microsoft.Extensions.AI.Abstractions library.

The .Text property used to be writable but now it is read-only.

It turns out that this code is a relic of a prior design that is no longer necessary. The only purpose of appending to the .Text property was so that I could use it to construct the MarkupString on the next line.

Analyzing the code even more, I am constantly querying my data structures for the latest Assistant message to get its index, simply to align with the index in the messageHistory array.

That is not necessary now. So let's refactor.

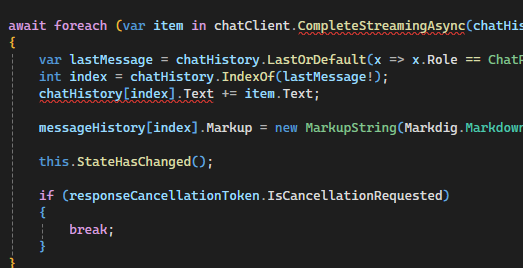

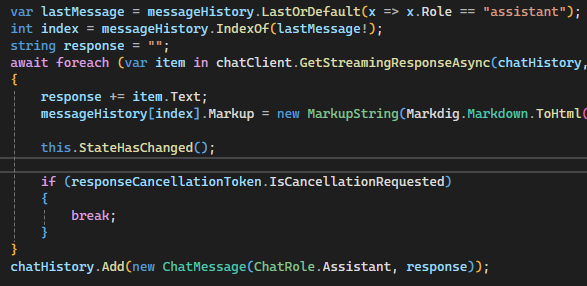

    var lastMessage = messageHistory.LastOrDefault(x => x.Role == "assistant");
    int index = messageHistory.IndexOf(lastMessage!);
    string response = "";

    await foreach (var item in chatClient.GetStreamingResponseAsync(chatHistory, chatOptions, cancellationToken: responseCancellationToken))
    {
        response += item.Text;
        messageHistory[index].Markup = new MarkupString(Markdig.Markdown.ToHtml(markdown: response, pipeline: MarkdownPipeline));
        this.StateHasChanged();

        if (responseCancellationToken.IsCancellationRequested)
        {
            break;
        }
    }

    chatHistory.Add(new ChatMessage(ChatRole.Assistant, response));

First, you'll see the index-finding logic now sits outside of the await foreach, and it uses the messageHistory array instead of the chatHistory array because messageHistory is what we care about updating.

Next, you'll see we use a simple string for concatenating the response, which is then used to construct the MarkupString.

Lastly, the chatHistory array is updated with a new ChatMessage object after the response has completed streaming. This is what allows us to avoid trying to write to the ChatMessage's .Text property.

It is also more aligned with how Microsoft has documented that you add a ChatMessage to history.
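As a point of comparison, the pattern in Microsoft's documentation collects the streamed updates and appends them to the history in one call once streaming finishes. The sketch below is a console version of that idea rather than my Blazor code; the prompt and the "llama3.1" model name are placeholders, and it relies on the AddMessages extension method from Microsoft.Extensions.AI.

```csharp
using System;
using System.Collections.Generic;
using Microsoft.Extensions.AI;
using OllamaSharp;

// Assumption: placeholder model name; use one you have pulled locally.
IChatClient chatClient = new OllamaApiClient(
    new Uri("http://localhost:11435/"),
    "llama3.1");

// Hypothetical prompt, only here to make the sketch self-contained.
List<ChatMessage> chatHistory =
[
    new(ChatRole.User, "Explain async streams in one paragraph."),
];

// Collect each streamed update instead of mutating an existing ChatMessage.
List<ChatResponseUpdate> updates = [];

await foreach (ChatResponseUpdate update in chatClient.GetStreamingResponseAsync(chatHistory))
{
    Console.Write(update.Text);
    updates.Add(update);
}

Console.WriteLine();

// Combine the updates into assistant message(s) and append them to the history.
chatHistory.AddMessages(updates);
```

Building the string by hand, as I do above, ends up in the same place. The difference is that AddMessages works from the full updates, so it can keep more than the plain text.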

After these changes, I was able to run the application and it worked as it did before.

Interestingly enough, there is a noticeable speedup, which may have come from avoiding the repeated index lookups inside the loop.

Until next time.