If you found value in this post, consider following me on X @davidpuplava for more valuable information about Game Dev, OrchardCore, C#/.NET and other topics.

Goal

In my last post, I added .NET Aspire to an existing web application to help orchestrate its external dependencies.

In particular, my web application is an LLM chat application that relies on Ollama for chat completions.

For all intents and purposes, Ollama is its own microservice that my web application consumes and communicates with through its REST API.

In this post, I show how I can add Ollama to my solution so that my web application has a reliable and consistent developer experience.

From Last Post

Here is a quick recap of the last post. If you don't need a refresher, skip to the next section.

To my existing ASP.NET MVC Web application solution, I:

- added a .NET Aspire AppHost project using the project template

- added a .NET Aspire ServiceDefaults project using the project template

- configured my web application to use `builder.AddServiceDefaults();`

- added `http` and `https` entries to my `launchSettings.json` file in the web application

For specific details, please check out my last post here.

Manually Running Ollama

For chat completions, my web application relies on an Ollama server that is running and configured to serve a local LLM.

In particular, my web application uses the OllamaApiClient type from the OllamaSharp library (see its GitHub repository here) to communicate with an Ollama server over HTTP.

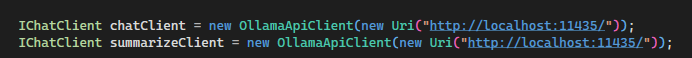

Here is the code that creates the clients.

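```csharp
using Microsoft.Extensions.AI; // IChatClient
using OllamaSharp;             // OllamaApiClient

IChatClient chatClient = new OllamaApiClient(new Uri("http://localhost:11435/"));      // chat completions
IChatClient summarizeClient = new OllamaApiClient(new Uri("http://localhost:11435/")); // conversation titles
```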

For this web application, I use separate clients for chat completion and summarizing. The summarizeClient is used to construct a title for new chat conversations.

Check out this other post of mine that discusses changing my web application from using the Microsoft.Extensions.AI.Ollama library to using OllamaSharp.

As you can see, each API client points to an Ollama server whose REST API is at http://localhost:11435.

Note that the default port for Ollama's REST API is 11434, but here I use 11435 for reasons you'll see shortly.

To get this to work, I had to make sure that the Ollama server was running on my machine and that the underlying model had been pulled.

Sometimes, I'd forget and get a runtime error when trying to use my chat application.
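When running Ollama by hand, one way to turn that runtime error into a clear startup failure is to check the server before the app begins serving requests. The sketch below is an illustration, not code from my application: `OllamaPreflight`, `ContainsModel`, and `EnsureReadyAsync` are hypothetical names, and it assumes Ollama's `GET /api/tags` endpoint, which lists locally pulled models in a `models` array with names such as `llama3:latest`.

```csharp
using System;
using System.Net.Http;
using System.Text.Json;
using System.Threading.Tasks;

// Hypothetical fail-fast check for a manually started Ollama server.
// Assumes GET /api/tags returns {"models":[{"name":"llama3:latest", ...}, ...]}.
public static class OllamaPreflight
{
    // True when the /api/tags JSON lists the model.
    // A bare name such as "llama3" is compared as "llama3:latest", Ollama's default tag.
    public static bool ContainsModel(string tagsJson, string model)
    {
        using JsonDocument doc = JsonDocument.Parse(tagsJson);
        if (!doc.RootElement.TryGetProperty("models", out JsonElement models) ||
            models.ValueKind != JsonValueKind.Array)
        {
            return false;
        }

        string wanted = model.Contains(':') ? model : model + ":latest";
        foreach (JsonElement m in models.EnumerateArray())
        {
            if (m.TryGetProperty("name", out JsonElement name) &&
                name.ValueKind == JsonValueKind.String &&
                name.GetString() == wanted)
            {
                return true;
            }
        }
        return false;
    }

    // Throws if the server cannot be reached or the model has not been pulled.
    public static async Task EnsureReadyAsync(Uri baseAddress, string model)
    {
        using var http = new HttpClient
        {
            BaseAddress = baseAddress,          // e.g. http://localhost:11435/ (trailing slash matters)
            Timeout = TimeSpan.FromSeconds(5)
        };

        string json;
        try
        {
            json = await http.GetStringAsync("api/tags");
        }
        catch (Exception ex) when (ex is HttpRequestException or TaskCanceledException)
        {
            // Connection refused, non-success status, or timeout
            throw new InvalidOperationException(
                $"Ollama is not reachable at {baseAddress}. Is the server running?", ex);
        }

        if (!ContainsModel(json, model))
        {
            throw new InvalidOperationException(
                $"Model '{model}' has not been pulled. Try 'ollama pull {model}'.");
        }
    }
}
```

For example, calling `await OllamaPreflight.EnsureReadyAsync(new Uri("http://localhost:11435/"), "llama3");` in the web application's Program.cs before `app.Run()` would surface a missing server or model immediately. It only detects the problem, though; it does not start Ollama or pull the model.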

The better way is to let .NET Aspire run Ollama for us.

Configure .NET Aspire to Run Ollama

With .NET Aspire now part of my solution, I can add Ollama as a resource that my web application depends on, as follows.

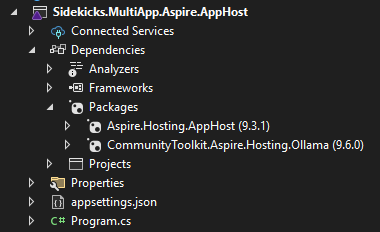

To my AppHost project, I add the CommunityToolkit.Aspire.Hosting.Ollama NuGet package.

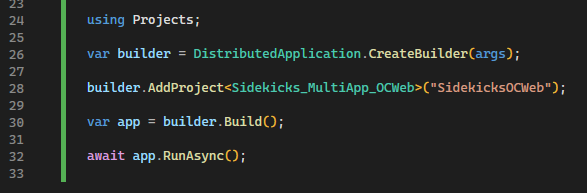

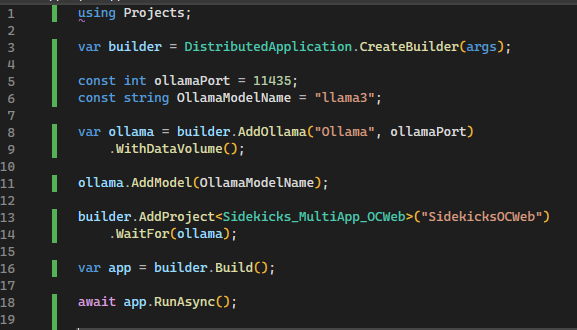

Here is the current state of the Program.cs file in the AppHost project.

I then modify that Program.cs file to add Ollama as a resource.

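```csharp
const int ollamaPort = 11435;            // must match the port in the web app's OllamaApiClient URI
const string OllamaModelName = "llama3"; // model the Ollama resource should serve

// Ollama resource on a fixed host port, with a data volume so downloaded models persist
var ollama = builder.AddOllama("Ollama", ollamaPort)
    .WithDataVolume();

ollama.AddModel(OllamaModelName);
```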

This code defines constants for a custom Ollama port and the specific model that Ollama should use.

It then calls the .AddOllama(...) extension method (from the CommunityToolkit library) to add the Ollama resource on that custom port, and chains .WithDataVolume() to give it a data volume.

And lastly, the code calls .AddModel(...) to tell the Ollama resource to use llama3.

The AppHost Program.cs file looks like this.

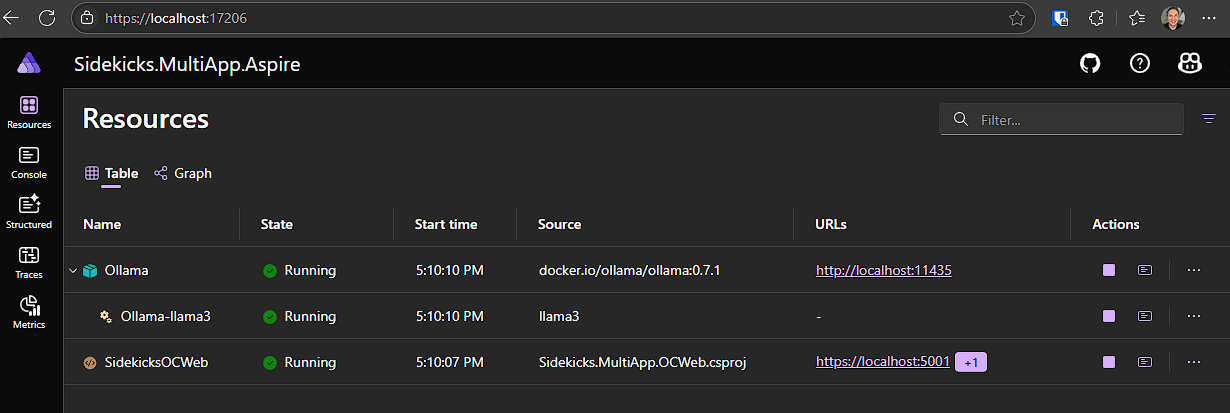

You can now run the application and see both the Ollama server resource and the llama3 model listed in the .NET Aspire dashboard.

One last step is to chain a call to .WaitFor(...) onto the web application's project resource, passing in the ollama resource, so that my web application waits until Ollama is available before it starts. This prevents a race condition where my web application is ready but Ollama is still starting up in the background.

```csharp
builder.AddProject<Sidekicks_MultiApp_OCWeb>("SidekicksOCWeb")
    .WaitFor(ollama);
```
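For readers who can't see the screenshots, here is a text sketch of a complete AppHost Program.cs assembled from the snippets in this section. It is a reconstruction, not a copy of my actual file, and it assumes the default AppHost template (which supplies `DistributedApplication.CreateBuilder(args)`, `builder.Build().Run()`, and implicit usings for the `Aspire.Hosting` namespace), the CommunityToolkit.Aspire.Hosting.Ollama package, and a project reference to the web app so that Aspire generates the `Projects.Sidekicks_MultiApp_OCWeb` type.

```csharp
// Sketch of an AppHost Program.cs assembled from this post's snippets.
// Assumes the AppHost template's implicit usings and a project reference to the web app.
var builder = DistributedApplication.CreateBuilder(args);

const int ollamaPort = 11435;            // matches the URI hardcoded in the web app
const string OllamaModelName = "llama3";

// Ollama resource on a fixed host port, with a data volume for downloaded models
var ollama = builder.AddOllama("Ollama", ollamaPort)
    .WithDataVolume();

ollama.AddModel(OllamaModelName);

// Start the web app only after the Ollama resource is ready
builder.AddProject<Projects.Sidekicks_MultiApp_OCWeb>("SidekicksOCWeb")
    .WaitFor(ollama);

builder.Build().Run();
```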

Next Steps

So far everything looks good, but the application would fail if I deployed it to a server, because my web application is hardcoded to use localhost when calling Ollama.

.NET Aspire provides a way to avoid hardcoding this reference, which I will cover in my next post.

Until then, keep coding.